Introduction

Are you asking yourself what is bias in machine learning?

Have you ever wondered why your favorite online streaming service sometimes recommends movies or shows that don’t quite match your interests? This could be due to what is bias in machine learning.

Bias is a systematic error that skews the results of algorithms, leading to less reliable predictions and outcomes. In this blog, we’ll guide you through understanding what bias in machine learning really means, how it impacts the results you encounter every day, and ways to reduce its influence for more accurate modeling.

Get ready to unlock insights on what is bias in machine learning!

Here are other relevant article about machine learning that you must read next!

- Machine Learning For Kids: This is What Your Child Needs to Know in 2024

- What Is Recall In Machine Learning: Best Guide In 2024

- What Is Recall In Machine Learning: Best Guide In 2024

Table of Contents

Key Takeaways – What is Bias in Machine Learning?

- Bias in machine learning happens when assumptions and mistakes guide the AI system, leading to unfair or inaccurate outcomes. It’s like starting an experiment with the wrong idea.

- Regularization techniques and cross-validation help reduce bias and variance, making AI models more reliable. These methods fine-tune model details to improve predictions on new data.

- Feature engineering is key for minimizing bias and improving model performance by carefully selecting and transforming input data. This helps the AI learn better from the information given.

What is Bias and Variance in Machine Learning

Bias and variance are crucial factors in machine learning. Understanding these concepts is fundamental for building accurate and robust models.

What is Bias in Machine Learning?

Bias in machine learning is like when your science fair project experiment doesn’t go as planned because you assumed something wrong from the start. It’s a big mistake that happens during the making of AI systems, where the computer thinks in a way that’s off track because the people who made it or the data used to teach it weren’t spot on.

This can lead machines to make guesses that aren’t accurate, leading to unfair or prejudiced results. For example, if an AI system is taught with pictures of mostly one type of dog breed, it might get confused and wrongly label other breeds.

What is Variance in Machine Learning?

Variance is another issue about how much an AI’s guesses change if you teach it with different sets of data. Think about practicing basketball shots from different spots on the court; some days you miss more than others based on where you’re standing.

In machine learning, high variance means an algorithm does really well with its training data but misses the mark when given new info because it was too focused on remembering every little detail rather than understanding general rules.

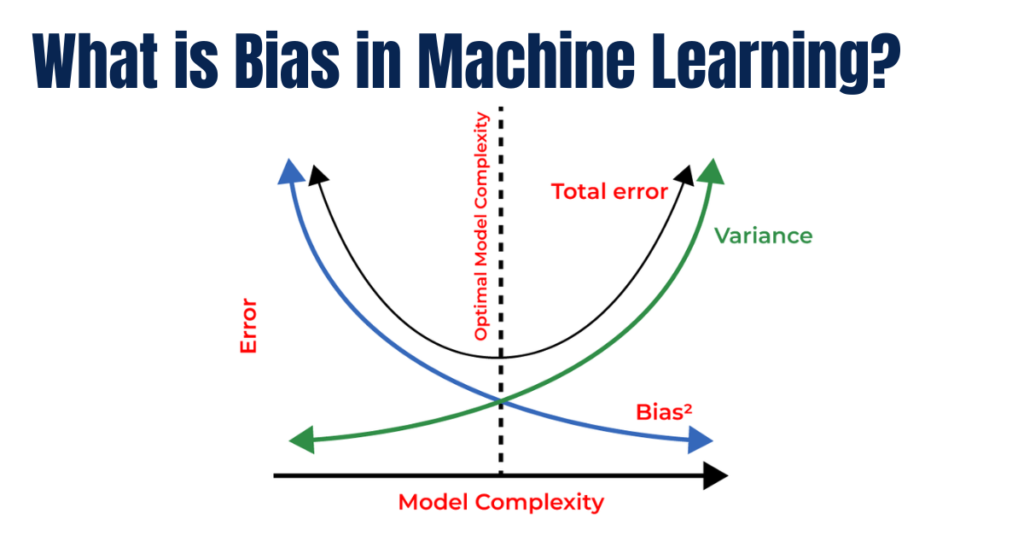

In essence, bias and variance are two sides of the same coin in machine learning: they both deal with mistakes but come from opposite directions.

Calculate bias and variance

Calculating bias and variance helps evaluate the performance of machine learning models. Bias is the difference between average predictions and true values, while variance measures how much predictions vary from their actual value.

These concepts are essential because a high bias can cause underfitting, leading to inaccurate predictions, whereas high variance may result in overfitting, causing the model to perform well on training data but poorly on new data.

To calculate bias and variance accurately, one commonly used method is through cross-validation techniques.

Understanding bias and variance enables you to recognize if your machine learning model suffers from underfitting or overfitting issues. By grasping these concepts thoroughly, you can implement strategies like regularization techniques and feature engineering to mitigate these challenges effectively.

Examples of bias and variance in machine learning

What is Bias in Machine Learning? Machine learning bias can manifest in various ways, including:

- Overfitting: This occurs when a model learns the training data too well, resulting in poor performance on new data.

- Underfitting: In contrast to overfitting, underfitting happens when a model is too simple to capture the underlying patterns in the data.

- Dataset bias: When training data does not accurately represent the real-world scenarios, the model may exhibit biased behavior.

- Algorithmic bias: Certain algorithms may inherently favor specific groups or characteristics present in the training data.

- Labeling bias: Errors or biases in the labeling process of training data can lead to incorrect assumptions by the model.

- Historical bias: Incorporating historical data that reflects past biases and inequalities can perpetuate unfair predictions and decisions made by machine learning models.

- Feature selection bias: Inappropriate choices of input features for a model can lead to biased outcomes and inaccurate predictions.

- Feedback loops bias: Biased feedback from users or other systems can further reinforce existing biases within machine learning models.

Impact of Bias and Variance in Machine Learning Models

Bias and variance in machine learning models can lead to underfitting or overfitting, affecting the accuracy and performance of the models.

Underfitting and overfitting

Underfitting and overfitting are important concepts in machine learning. Underfitting occurs when a model is too simple to capture the underlying structure of the data, resulting in poor performance on both the training and test data.

On the other hand, overfitting happens when a model is overly complex and fits the training data too closely, leading to high accuracy on training data but poor performance on unseen test data.

It’s vital to find the right balance between underfitting and overfitting to ensure that your model generalizes well to new, unseen data.

Recognizing underfitting and overfitting is crucial in developing accurate machine learning models without bias or variance.

Effects on accuracy and performance

Bias and variance in machine learning can significantly impact the accuracy and performance of models. When bias is present, your model might consistently miss the mark, leading to inaccurate predictions.

This means that the outcomes produced by the algorithm may not reflect real-world data accurately, affecting its overall performance. Furthermore, when variance is high, your model might be overly sensitive to fluctuations in the training data, causing it to make less reliable predictions when presented with new data.

Understanding these effects is crucial for developing trustworthy and equitable AI systems.

Now let’s explore ways to reduce bias and variance in machine learning models.

Top Ways to Reduce Bias and Variance in Machine Learning

Reduce bias and variance in machine learning through regularization techniques and cross-validation.

Regularization techniques

To minimize bias and variance in machine learning models, you can employ regularization techniques. These methods help prevent overfitting by adding a penalty term to the model’s loss function, discouraging complex or extreme parameter weights.

By using techniques like L1 and L2 regularization, you can control the model’s complexity and reduce its sensitivity to small variations in the training data. This helps create more generalizable and reliable machine learning models that can make accurate predictions on new, unseen data.

Regularization techniques are essential for reducing bias and variance in machine learning. They provide a way to fine-tune model parameters effectively while preventing overfitting, ensuring that your ML models produce fair and unbiased results.

Cross-validation

To ensure your machine learning model performs well on new data, you can use cross-validation. This technique helps in evaluating the model’s performance and generalizability by splitting the dataset into subsets for training and testing.

Cross-validation allows you to assess how well the model will perform on unseen data, which is crucial for creating accurate and reliable machine learning models. By using this method, you can minimize bias and variance in your models while enhancing their predictive capability.

Implementing cross-validation is vital as it aids in reducing the chance of overfitting or underfitting your machine learning model. It also assists in optimizing parameters to achieve better performance without compromising accuracy.

The process involves iteratively training and testing the model across different subsets of data, providing a more comprehensive evaluation of its effectiveness. With cross-validation, you gain a deeper understanding of how well your model generalizes to new data, ultimately leading to more robust and trustworthy predictions.

Feature engineering

Now that you understand cross-validation, it’s important to know that feature engineering is another crucial aspect of reducing bias and variance in machine learning models. Feature engineering involves selecting and transforming the input data used by the model to improve its performance.

By carefully choosing and modifying the features, you can help the model learn more effectively from the data without introducing unnecessary bias or variance. For example, transforming categorical data into numerical representations or creating new features based on existing ones can enhance the model’s predictive accuracy while mitigating biases present in the original data.

Implementing effective feature engineering techniques is essential for developing fair and accurate machine learning models.

Remember, feature engineering plays a significant role in addressing bias and variance within machine learning models. It allows you to optimize the data used by your model, ensuring fairness and accuracy in its predictions.

By leveraging feature engineering techniques such as transformation and selection, you can minimize biases while improving overall model performance.

Conclusion – What is Bias in Machine Learning?

When developing machine learning models, it’s essential to understand what is bias and variance in machine learning. Recognizing and addressing bias is crucial in creating trustworthy and fair AI systems.

Bias can lead to inaccurate predictions, impacting the reliability of your model. By mitigating bias through techniques like regularization and cross-validation, you can enhance the ethical integrity of your AI systems.

Understand that reducing bias in machine learning is essential for ensuring equity and credibility in predictive analytics.

FAQs – What is Bias in Machine Learning?

1. Can you explain what bias in machine learning is?

Bias in machine learning refers to the tendency or predisposition of an artificial intelligence system to make decisions that are not fair, showing partiality or inequality.

2. How does discrimination and stereotyping relate to bias in machine learning?

When a machine learning model shows discrimination or stereotyping, it means there’s data bias. This unfairness can be due to nonobjectivity and partisanship in the data used for training the AI models.

3. What role does deep learning play in creating biased outputs?

Deep learning is a subset of artificial intelligence that learns from vast amounts of data. If this data contains biases such as inequality or partiality, then these biases may be learned by the deep learning systems.

4. Is fairness important when we talk about ethics in technology like ML?

Yes, fairness is critical for ethics in technology including predictive analytics and other aspects of ML (Machine Learning). It helps prevent any form of discrimination and ensures balanced outcomes from AI systems.

5. How can we address bias issues within Machine Learning?

Addressing bias involves careful data analysis before feeding it into our AI models; ensuring diversity and representation while avoiding stereotypes; maintaining transparency on how decisions are made; finally incorporating fairness principles into our Machine Learning practices.